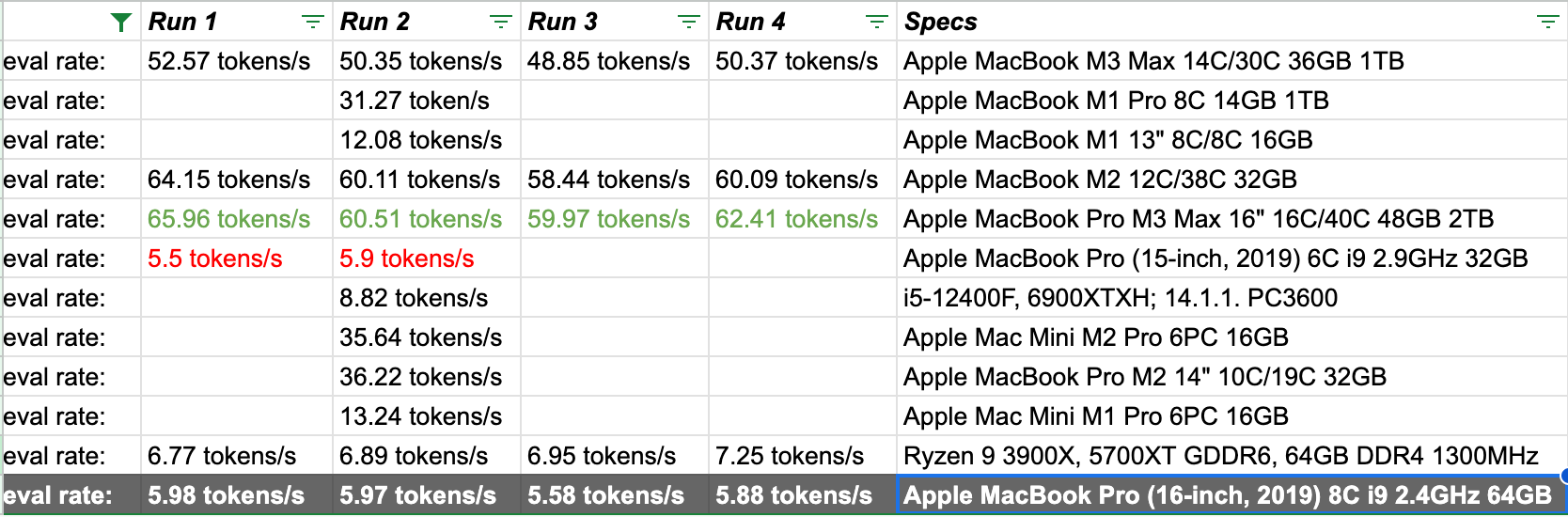

Ollama Performance Comparison Chart

I stumbled upon Ollama sometime last year, during the initial explosion of interest in ChatGPT, while looking for ways to run large language models (LLMs) locally for research at work.

I'm a long-time Linux dabbler, and my GPUs have almost always come from Team Red (AMD) unless the hardware was donated or the choice was out of my hands. I chalk this up to all the driver difficulties on Linux. Torvalds made his feelings about the hardware situation known in 2012. It took a laptop refresh cycle in 2014 before he reversed that opinion.

Why am I mentioning this? Well, it's time for another laptop refresh, and I'm coming from a MacBook Pro (16-inch, 2019) kitted out with 64GB of DDR4 RAM running at 2666MHz, as well as an AMD Radeon Pro 5500M with 4GB of GDDR6 memory that auto-switches with an Intel UHD Graphics 630.

It's now just past the first quarter of 2024, and all current-generation M3 MacBooks run Apple Silicon (Apple's version of the ARM architecture). Rumors were even circulating the other day about a possible M3 Ultra, because of how slightly neutered the M3 lineup is compared to the previous-generation M2 Ultra. Apple Silicon uses neither AMD nor Nvidia products; instead it has an integrated GPU of either 30 or 40 cores that shares memory with the CPU. There are other CPU comparisons out there; I'm going to focus on my tiny little use case to help me decide which M3 size and model I should go for.

This article was inspired by the Ars Technica forum topic: The M3 Max/Pro Performance Comparison Thread

The test is simple: after the initial installation of Ollama, run this single line and note the performance when asking Mistral a basic question:

ollama run mistral "Why is the sky blue?" --verbose
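If you want to compare several runs, the timing summary that `--verbose` prints after the answer can be pasted into a small script. Here is a minimal Python sketch that pulls out the tokens-per-second figures. The sample text and its numbers are made up for illustration, and the exact line layout is an assumption that may differ between Ollama versions; `parse_rates` is just a name I picked.

```python
import re

# Sample stats in the shape that `ollama run ... --verbose` prints after a reply.
# The layout is assumed and the numbers are invented, not measured results.
SAMPLE_STATS = """\
total duration:       8.763s
load duration:        1.2ms
prompt eval count:    15 token(s)
prompt eval duration: 412ms
prompt eval rate:     36.41 tokens/s
eval count:           230 token(s)
eval duration:        8.35s
eval rate:            27.54 tokens/s
"""


def parse_rates(stats_text: str) -> dict[str, float]:
    """Return every '<name> rate: <number> tokens/s' line as {name: number}."""
    rates = {}
    for line in stats_text.splitlines():
        match = re.match(r"\s*(.+?) rate:\s+([\d.]+) tokens/s", line)
        if match:
            rates[match.group(1)] = float(match.group(2))
    return rates


rates = parse_rates(SAMPLE_STATS)
print(rates)
```

The "eval" entry is the generation speed, which is the number most people quote when comparing machines.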

Ollama now allows for GPU usage. However, none of my hardware is anywhere near the compatibility list, and the publicly posted reference results in the thread predate that feature.

In the above results, the last two rows are from my casual gaming rig and the aforementioned work laptop. Red text marks the lowest recorded score across all runs, and green marks the highest.

There is a stark performance difference from traditional CPUs (Intel or AMD), simply because of the number of computational units involved. In a GPU, the cores number in the thousands instead of single or double digits.

What is noticeable is that a local LLM can definitely take advantage of Apple Silicon. Also, while CPU core counts are important, the number of GPU cores and the headroom from shared memory allow for more effective results.

When choosing between slightly more cores and memory above 24GB, there is one more thing to consider. While a higher GPU core count allows for more efficient evaluation, more GPU memory (shared or otherwise) makes it possible to load and run versions of most currently available LLMs that are larger than 7B parameters. Otherwise, you're going to need to use a quantized version (read as: less precise) in order to get going.
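To put rough numbers on the memory side, here is a back-of-the-envelope Python sketch. It assumes the weights dominate memory use and counts only parameters times bits per weight, so it ignores the context cache and runtime overhead and should be read as a lower bound. The bit widths are illustrative choices, and `weight_memory_gb` is a hypothetical helper, not part of any tool.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    bytes_total = params_billion * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


# Compare full 16-bit weights against two common quantization widths.
for bits in (16, 8, 4):
    print(f"7B at {bits}-bit: ~{weight_memory_gb(7, bits):.1f} GB")
```

By this estimate a 7B model needs about 14 GB at 16 bits but only about 3.5 GB at 4 bits, which is why quantized versions fit on machines with modest shared memory.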

Well, those are my initial thoughts for now. If and when the laptop refresh actually happens for me, I might just update this article with measurements. Until then, see you in the next one.